Hi there!

Welcome to the website of Hanyang Kong (孔晗旸). 🌐

I am honored to have been pursuing my Ph.D. at the xML Lab @ NUS since August 2021, advised by Prof. Xinchao Wang. My academic journey in computer science began at Xi’an Jiaotong University, where I earned my master’s degree under the mentorship of Prof. Qingyu Yang. 🎓

My research centers on AIGC (AI-Generated Content) and LLMs (Large Language Models), with a growing emphasis on 3D/4D Vision-Language-Action (VLA) models that empower agents to understand, reason about, and act in both static and dynamic real-world environments with spatial and temporal depth.

Key research interests include:

- 📊 3D Generation and Estimation: Advancing digital modeling for realistic scene understanding.

- 💡 Diffusion Models and LLM Applications: Exploring generative AI for both creative and practical purposes.

- 🌍 3D/4D Vision-Language-Action (VLA) Models: Developing embodied AI systems capable of perceiving, interpreting, and interacting with complex real-world physical environments.

Thank you for visiting!

I’m open to Research Scientist/Engineer positions starting in 2026, preferably in the USA or Singapore.

🔥 News

- 2025.06: 🎉🎉 Our paper RogSplat: Robust Gaussian Splatting via Generative Priors was accepted by ICCV’25.

- 2025.02: 🎉🎉 Our paper Generative Sparse-View Gaussian Splatting was accepted by CVPR’25.

- 2024.10: 🎉🎉 Our paper EDGS: Efficient Gaussian Splatting for Monocular Dynamic Scene Rendering via Sparse Time-Variant Attribute Modeling was accepted by AAAI’25.

- 2024.07: 🎉🎉 Our paper DreamDrone: Text-to-Image Diffusion Models are Zero-shot Perpetual View Generators was accepted by ECCV’24.

- 2023.07: 🎉🎉 Our paper Priority-Centric Human Motion Generation in Discrete Latent Space was accepted by ICCV’23.

📝 Publications

WorldWarp: Propagating 3D Geometry with Asynchronous Video Diffusion

Hanyang Kong, Xingyi Yang, Xiaoxu Zheng, Xinchao Wang

- Asynchronous Video Diffusion: Adapts a full-sequence video diffusion model into an asynchronous diffusion process for infinite, pose-guided scene generation from a single image.

- Warped Visual Hints: Uses forward-warped 3DGS renderings as strong, explicit conditions to direct the synthesis of future frames.

- Online Geometric Cache: Ensures cross-chunk consistency by dynamically optimizing the underlying 3D geometry.

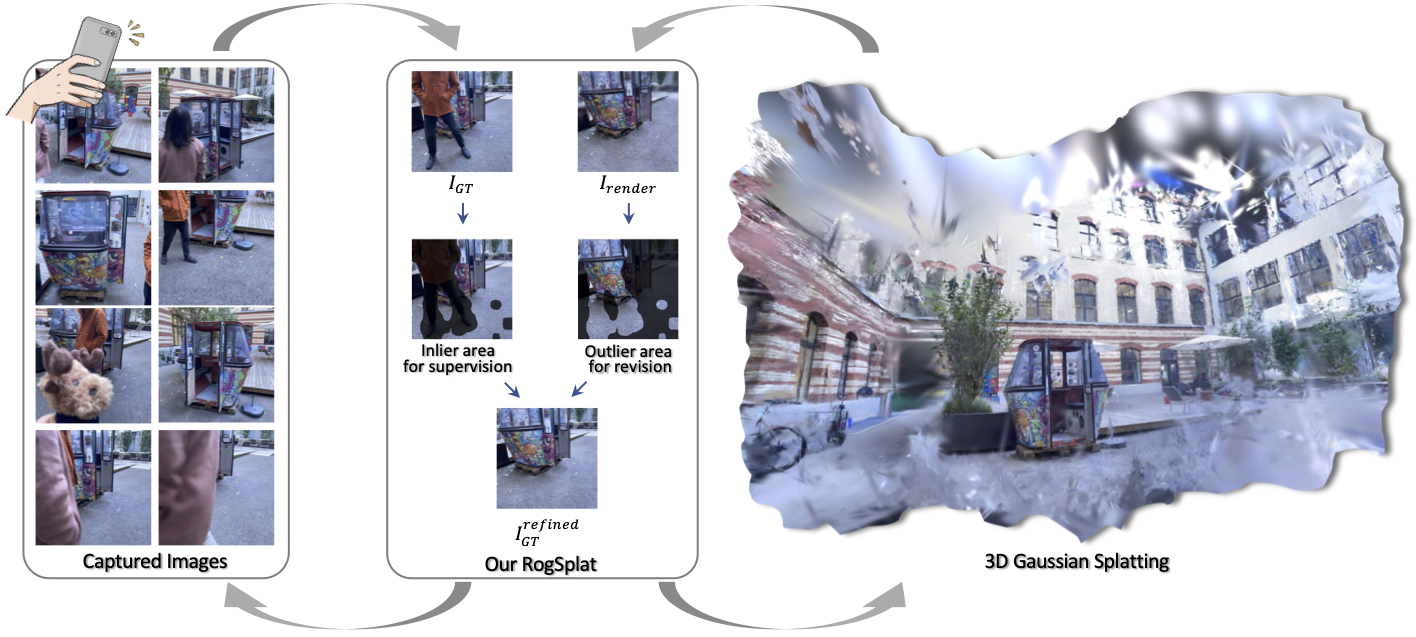

RogSplat: Robust Gaussian Splatting via Generative Priors

Hanyang Kong, Xingyi Yang, Xinchao Wang

- Make 3DGS Work in the Wild: RogSplat handles real-world corruptions such as occlusion and motion blur.

- Smart Outlier Cleanup: Detects and inpaints corrupted regions with a generative refiner.

- Reliable in Real Scenes: Outperforms prior methods on complex real-world datasets.

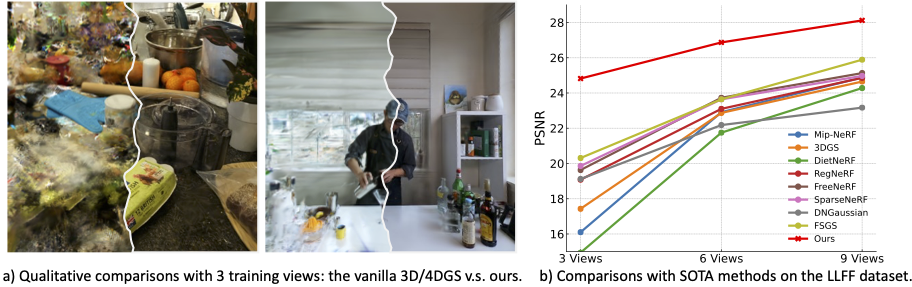

Generative Sparse-View Gaussian Splatting

Hanyang Kong, Xingyi Yang, Xinchao Wang

- Turn Sparse Views into Rich 3D: Augments 3D/4D Gaussian splatting with diffusion-generated novel views.

- Geometry-Aware Consistency: Enforces structural alignment via semantic correspondences.

- More with Less: Matches models trained on dense inputs while using only a few input images.

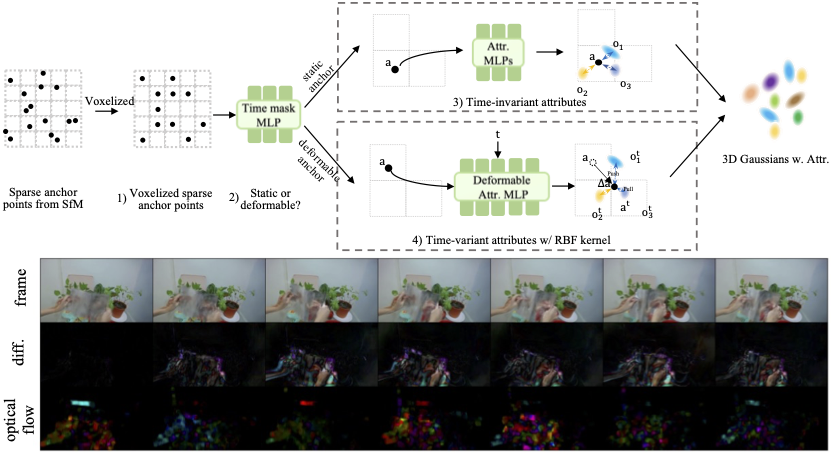

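EDGS: Efficient Gaussian Splatting for Monocular Dynamic Scene Rendering via Sparse Time-Variant Attribute Modeling

Hanyang Kong, Xingyi Yang, Xinchao Wang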

- Voxelized Time-Variant 3DGS: Introduces deformable Gaussian splatting with unsupervised attribute filtering.

- Kernel-Based Motion Flow: Formulates scene deformation using sparse, interpretable flow.

- Faster, Better Rendering: Achieves faster rendering with superior visual quality.

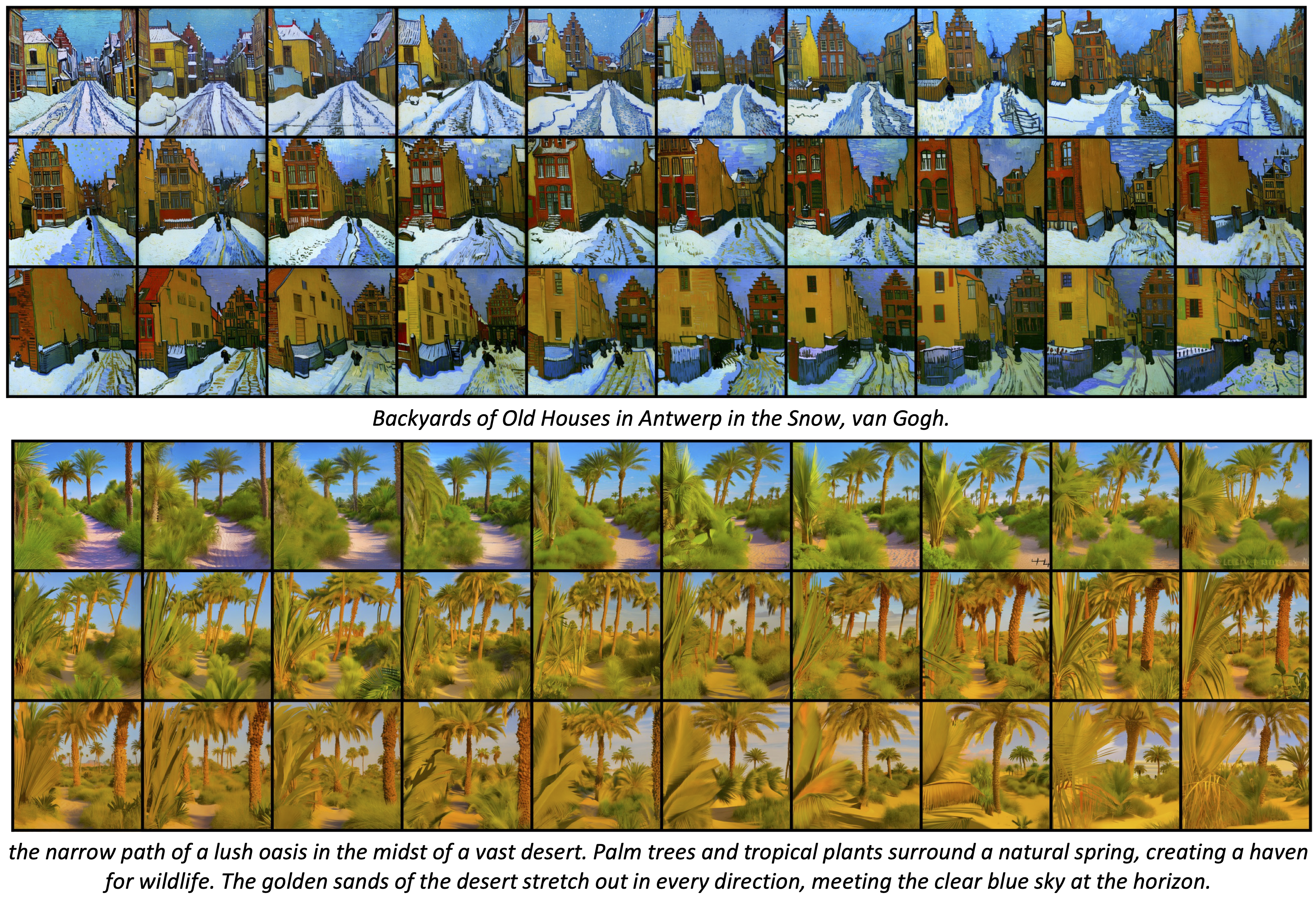

DreamDrone: Text-to-Image Diffusion Models are Zero-shot Perpetual View Generators

Hanyang Kong, Dongze Lian, Michael Bi Mi, Xinchao Wang

- Zero-shot, Training-Free Scene Creation: Generates perpetual scenes directly from text, without any scene-specific training.

- Click-Guided Dreamscape Navigation: Lets users steer the drone's flight by selecting points, offering a visually immersive experience.

- Resource-Efficient Scene Generation: Eliminates the need for a 3D point cloud, enabling faster scene creation with lower computational cost.

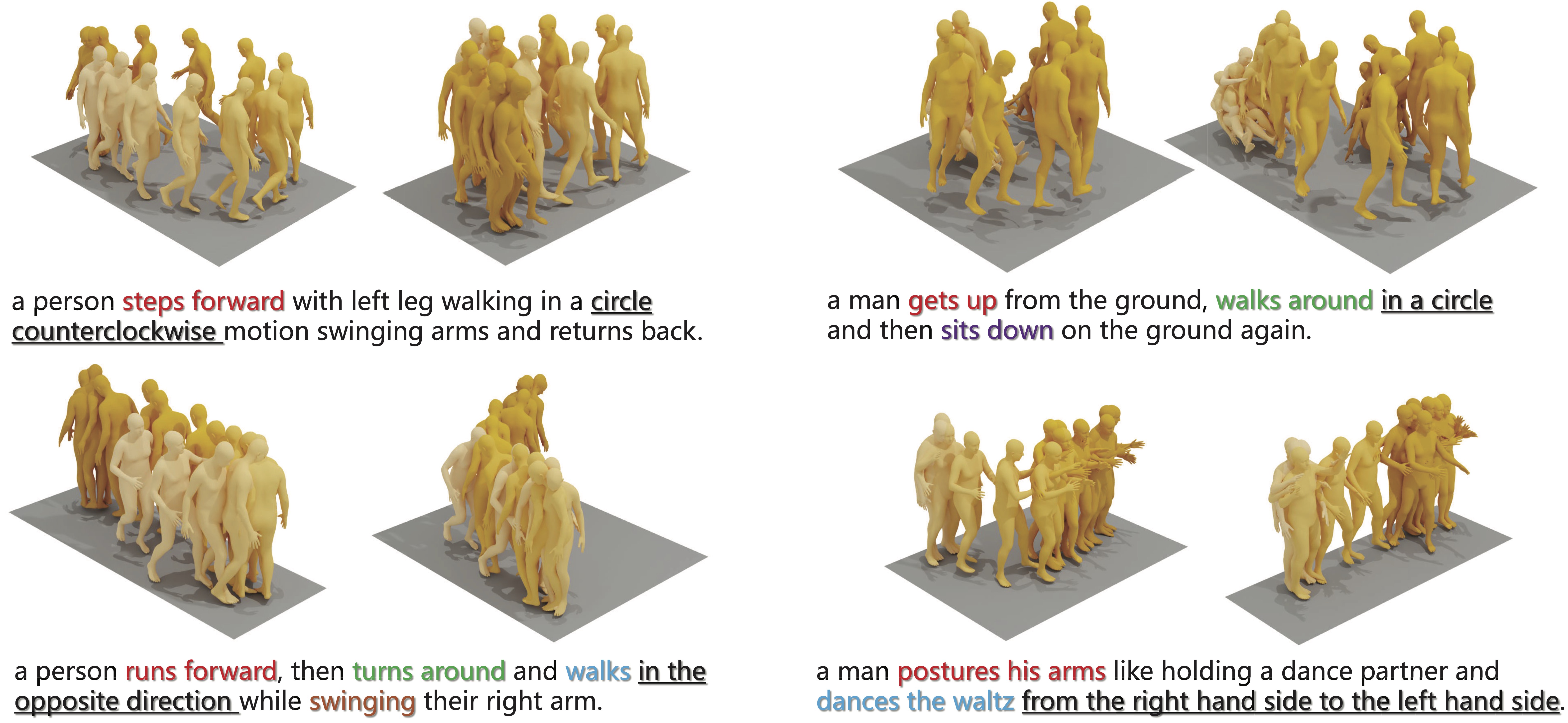

Priority-Centric Human Motion Generation in Discrete Latent Space

Hanyang Kong, Kehong Gong, Dongze Lian, Michael Bi Mi, Xinchao Wang

- Text-to-motion generation in discrete latent space.

- A priority-centric diffusion scheme for discrete diffusion models.

📖 Education

- 2021.08 - present: Ph.D. candidate in the College of Design and Engineering, National University of Singapore.

- 2017.08 - 2020.06: M.Eng. in the Faculty of Electronic and Information Engineering, Xi’an Jiaotong University.

- 2013.08 - 2017.06: B.Eng. in the Faculty of Electrical Engineering and Automation, Hefei University of Technology.